Power BI is a great tool for visualizing data and building interactive dashboards and reports. Log Analytics is great for gathering and correlating data. Naturally, the two make a great pair. While there isn’t yet a native connector for Log Analytics, you can still pull data by writing a custom M query in Power BI. Thankfully, with just a couple of clicks, Log Analytics will generate the whole query for you, so you don’t need to know M to pull data. When you do this, you’ll need to log in to Azure using an Organization Account or another interactive login method. But what if you need a report that doesn’t require you to log in every time? Using an App Registration and authenticating via OAuth can accomplish this, but how do we do that in Power BI?

Security Disclaimer

The method that I’m going to show you stores the client secret (the API key) in the dataset/query. This is meant more as a proof of concept than something you would use in a production environment, unless you can protect that data. If you’re putting this report on a Power BI server, make sure people cannot download a copy of the report file, as that would allow them to obtain the secret.

Setup

Before we try to query Log Analytics from Power BI with OAuth, we need to set up an App Registration.

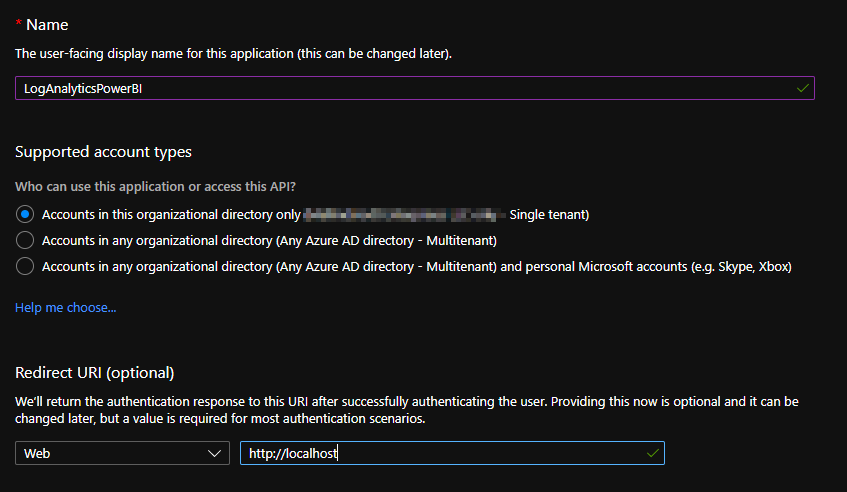

1. Log in to Azure and open App Registrations, either from the main portal or via Azure Active Directory. Click the New Registration button, then give the registration a name and a Redirect URI.

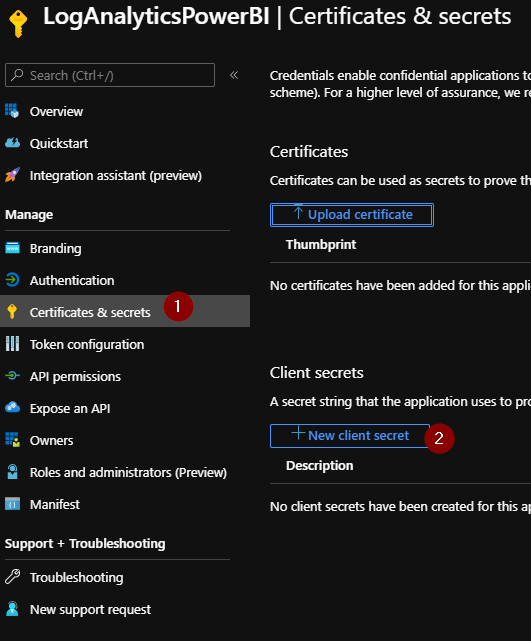

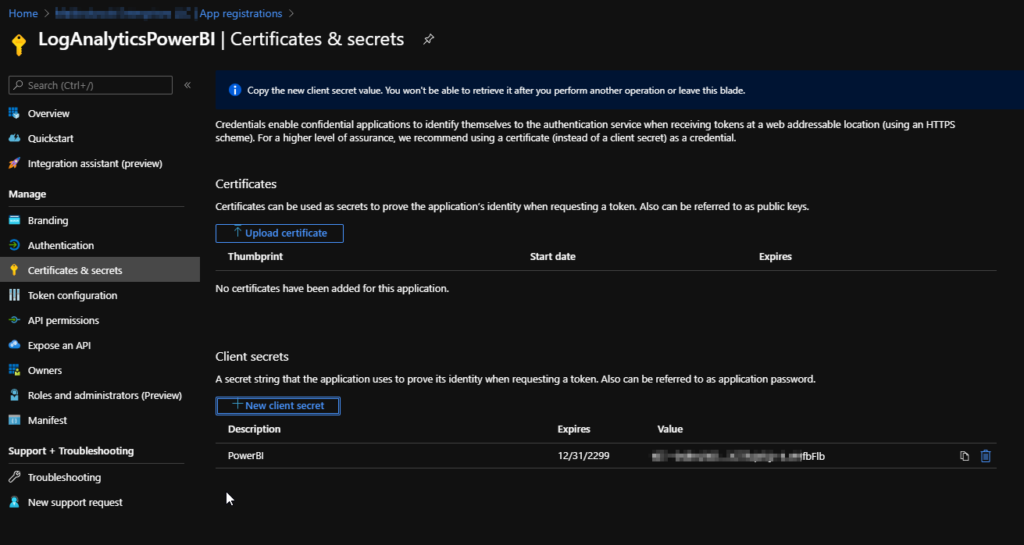

2. Next, generate a new client secret.

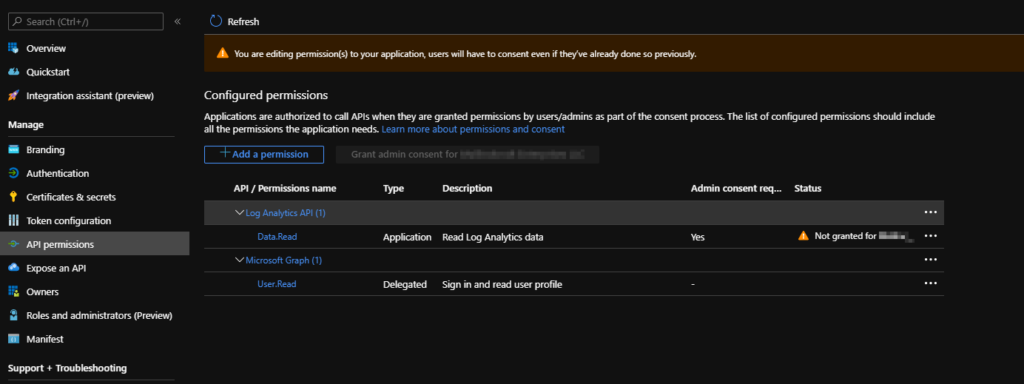

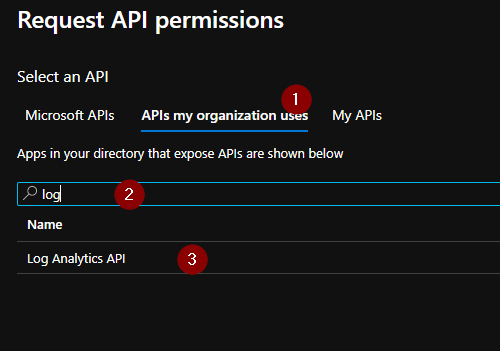

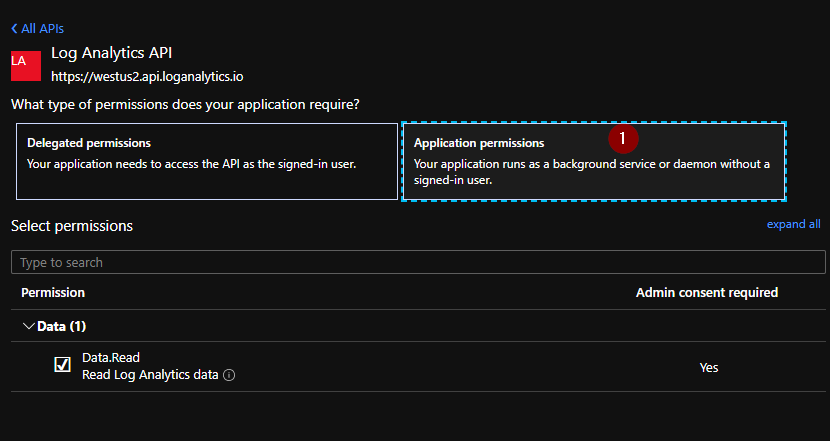

3. Now we need to grant access to the Log Analytics API (Data.Read). To do this, click Add Permission, select “APIs my organization uses”, and search for Log Analytics.

Once you’ve added the permission, you need to grant admin consent so that the app can interact with the API on the user’s behalf.

We now have the App Registration almost ready to go. What we’ve done is grant the App Registration the ability to query the Log Analytics API. What we haven’t done yet is grant it access to our Log Analytics workspaces. We’ll take care of that next.

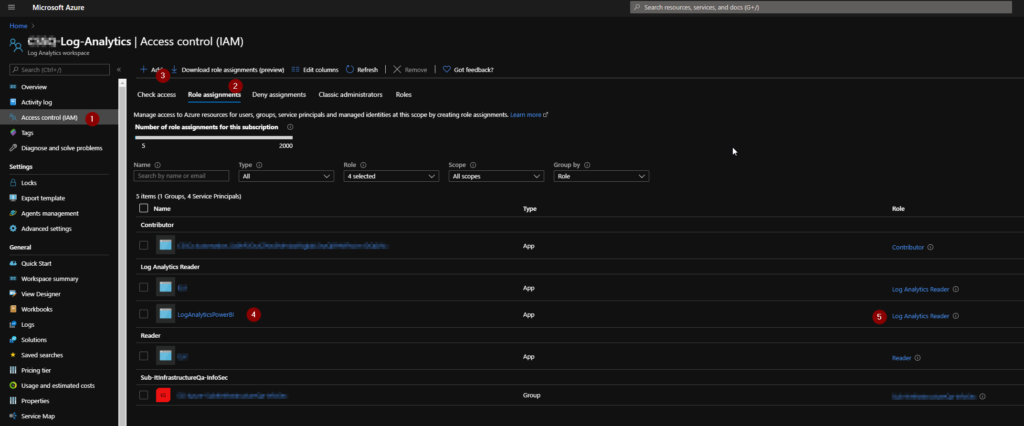

4. Browse to your Log Analytics workspace. We’ll need to add our App Registration to the Log Analytics Reader role. This will allow the App Registration to query any table within this workspace. If you want to limit the tables that the App Registration is able to query, you will need to define a custom role and assign the App Registration to that role instead. I won’t cover creating a custom role in this post, but you can read about how to create a custom role here and see a list of all the possible roles here. You may also want to read through this article to learn about the schema for the JSON file that makes up each custom role.

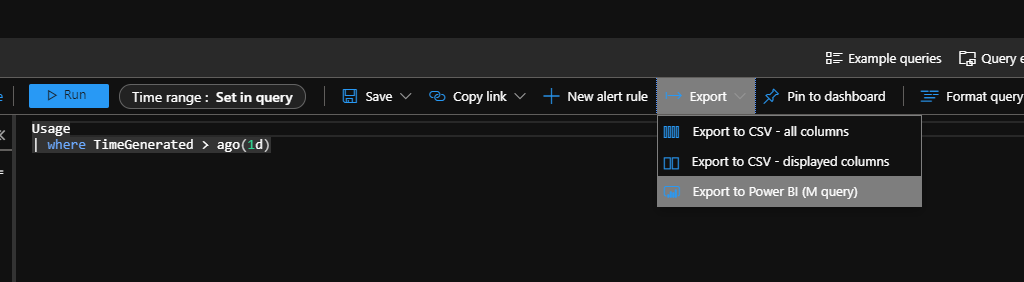

5. Now that the permissions have been granted, let’s run a query. Once you have some results, click on Export and then Export to Power BI (M query). This will download a text file that contains the query. Go ahead and open it up.

The text file will look something like this. If we were to put this into Power BI as is, we could get our data after authenticating with our Organization Account. We’ll take the output and customize it just a bit.

```powerquery
let AnalyticsQuery =
let Source = Json.Document(Web.Contents("https://api.loganalytics.io/v1/workspaces/xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxx/query",
[Query=[#"query"="Usage
| where TimeGenerated > ago(1d)
",#"x-ms-app"="OmsAnalyticsPBI",#"prefer"="ai.response-thinning=true"],Timeout=#duration(0,0,4,0)])),
TypeMap = #table(
{ "AnalyticsTypes", "Type" },
{
{ "string", Text.Type },
{ "int", Int32.Type },
{ "long", Int64.Type },
{ "real", Double.Type },
{ "timespan", Duration.Type },
{ "datetime", DateTimeZone.Type },
{ "bool", Logical.Type },
{ "guid", Text.Type },
{ "dynamic", Text.Type }
}),
DataTable = Source[tables]{0},
Columns = Table.FromRecords(DataTable[columns]),
ColumnsWithType = Table.Join(Columns, {"type"}, TypeMap , {"AnalyticsTypes"}),
Rows = Table.FromRows(DataTable[rows], Columns[name]),
Table = Table.TransformColumnTypes(Rows, Table.ToList(ColumnsWithType, (c) => { c{0}, c{3}}))
in
Table
in AnalyticsQuery
```
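To see what the tail of the exported query does without calling the API, here is a minimal sketch that runs the same steps against a hand-written record. The sample `Source` value (table name, column names, and rows) is made up for illustration and only assumes the `tables` / `columns` / `rows` shape that the exported query itself reads.

```powerquery
let
    // Hypothetical stand-in for Json.Document(Web.Contents(...)); the values are invented
    Source = [
        tables = {
            [
                name = "PrimaryResult",
                columns = {
                    [name = "DataType", type = "string"],
                    [name = "Quantity", type = "real"]
                },
                rows = {
                    {"Perf", 1.5},
                    {"Heartbeat", 0.25}
                }
            ]
        }
    ],
    // Only the two entries this sample needs; the exported query lists all of them
    TypeMap = #table(
        {"AnalyticsTypes", "Type"},
        {
            {"string", Text.Type},
            {"real", Double.Type}
        }
    ),
    DataTable = Source[tables]{0},
    // One row per result column, with "name" and "type" fields
    Columns = Table.FromRecords(DataTable[columns]),
    // After the join each row is: name, type, AnalyticsTypes, Type
    ColumnsWithType = Table.Join(Columns, {"type"}, TypeMap, {"AnalyticsTypes"}),
    // Build the untyped result table using the column names from the response
    Rows = Table.FromRows(DataTable[rows], Columns[name]),
    // c{0} is the column name and c{3} is the matching M type
    Table = Table.TransformColumnTypes(Rows, Table.ToList(ColumnsWithType, (c) => {c{0}, c{3}}))
in
    Table
```

Pasting this into a blank query should give a small two-row table, which makes it easier to see that the join only exists to translate Log Analytics type names into M types.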

6. Using OAuth is a two-step process. This is commonly known as two-legged OAuth: we first retrieve a token, and then use that token to make our API calls. To get the token, add the following after the first line:

```powerquery
let ClientId = "xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxx",
ClientSecret = Uri.EscapeDataString("xxxxxxxxxxxxx"),
AzureTenant = "xxxxxx.onmicrosoft.com",
LogAnalyticsQuery = "Usage
| where TimeGenerated > ago(1d)
",
OAuthUrl = Text.Combine({"https://login.microsoftonline.com/",AzureTenant,"/oauth2/token?api-version=1.0"}),
Body = Text.Combine({"grant_type=client_credentials&client_id=",ClientId,"&client_secret=",ClientSecret,"&resource=https://api.loganalytics.io"}),
OAuth = Json.Document(Web.Contents(OAuthUrl, [Content=Text.ToBinary(Body)])),
```

This bit sets up the request and fetches the token. I’ve split out the Log Analytics query to make it easier to reuse the code for additional datasets. You’ll want to put your own ClientId, ClientSecret, and Azure tenant in the first three lines. After that, edit the Source line. Change it from:

```powerquery
let Source = Json.Document(Web.Contents("https://api.loganalytics.io/v1/workspaces/xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxx/query",
[Query=[#"query"="Usage
| where TimeGenerated > ago(1d)
",#"x-ms-app"="OmsAnalyticsPBI",#"prefer"="ai.response-thinning=true"],Timeout=#duration(0,0,4,0)])),
```

to something like this:

```powerquery
Source = Json.Document(Web.Contents("https://api.loganalytics.io/v1/workspaces/xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxx/query",
[Query=[#"query"=LogAnalyticsQuery,#"x-ms-app"="OmsAnalyticsPBI",#"prefer"="ai.response-thinning=true"],Timeout=#duration(0,0,4,0),Headers=[#"Authorization"="Bearer " & OAuth[access_token]]])),
```

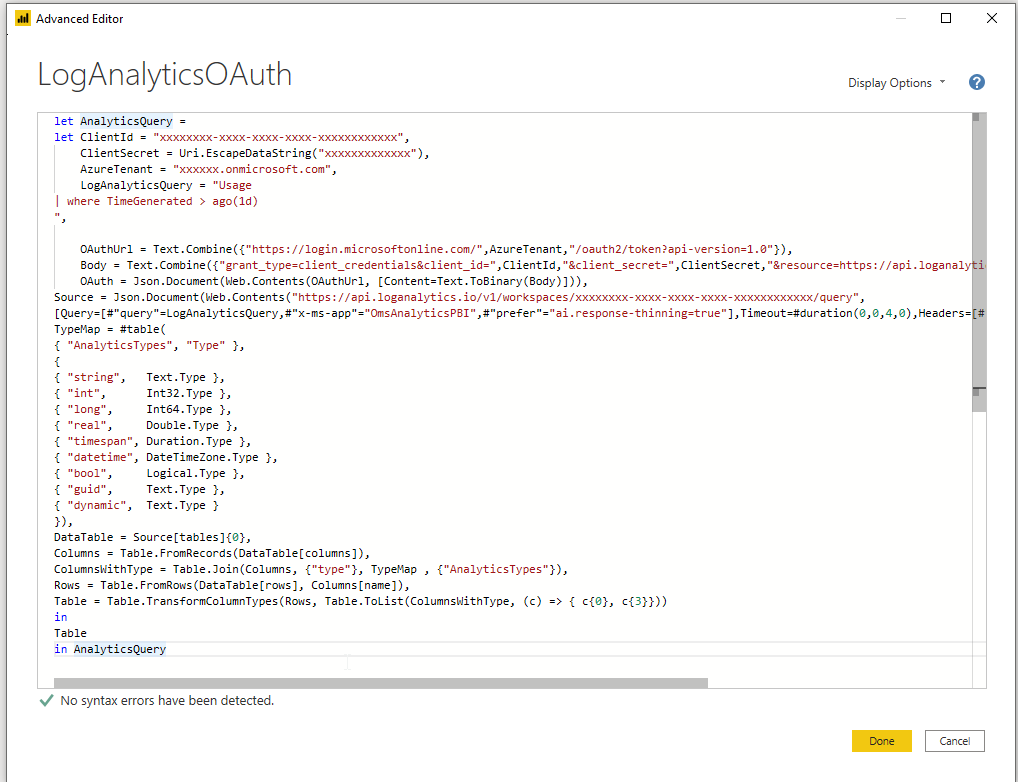

You may notice that we did two things. First, we changed the query to use our variable instead of the text itself. This is done purely to make it easier to re-use the code for additional datasets. The other thing we’ve done is add the Authorization header to the request using the token we obtained from the OAuth line. You should now have something like this:

```powerquery
let AnalyticsQuery =
let ClientId = "xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxx",
ClientSecret = Uri.EscapeDataString("xxxxxxxxxxxxx"),
AzureTenant = "xxxxxx.onmicrosoft.com",
LogAnalyticsQuery = "Usage
| where TimeGenerated > ago(1d)
",
OAuthUrl = Text.Combine({"https://login.microsoftonline.com/",AzureTenant,"/oauth2/token?api-version=1.0"}),
Body = Text.Combine({"grant_type=client_credentials&client_id=",ClientId,"&client_secret=",ClientSecret,"&resource=https://api.loganalytics.io"}),
OAuth = Json.Document(Web.Contents(OAuthUrl, [Content=Text.ToBinary(Body)])),
Source = Json.Document(Web.Contents("https://api.loganalytics.io/v1/workspaces/xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxx/query",
[Query=[#"query"=LogAnalyticsQuery,#"x-ms-app"="OmsAnalyticsPBI",#"prefer"="ai.response-thinning=true"],Timeout=#duration(0,0,4,0),Headers=[#"Authorization"="Bearer " & OAuth[access_token]]])),
TypeMap = #table(
{ "AnalyticsTypes", "Type" },
{
{ "string", Text.Type },
{ "int", Int32.Type },
{ "long", Int64.Type },
{ "real", Double.Type },
{ "timespan", Duration.Type },
{ "datetime", DateTimeZone.Type },
{ "bool", Logical.Type },
{ "guid", Text.Type },
{ "dynamic", Text.Type }
}),
DataTable = Source[tables]{0},
Columns = Table.FromRecords(DataTable[columns]),
ColumnsWithType = Table.Join(Columns, {"type"}, TypeMap , {"AnalyticsTypes"}),
Rows = Table.FromRows(DataTable[rows], Columns[name]),
Table = Table.TransformColumnTypes(Rows, Table.ToList(ColumnsWithType, (c) => { c{0}, c{3}}))
in
Table
in AnalyticsQuery
```
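If the token request fails (a mistyped secret, for example), the query above simply errors out. The following is a rough sketch of a standalone query that fetches only the token, which can help when troubleshooting. It is a variation and not part of the exported query. It assumes the same placeholder values, builds the form body with `Uri.BuildQueryString` so every value is URL-encoded, sets the `Content-Type` header explicitly, and uses `ManualStatusHandling` to surface a readable error. The step names `Response`, `Status`, and `AccessToken` and the error reason `TokenRequestFailed` are my own, and the choice of 400 and 401 as the codes to intercept is an assumption about how the token endpoint reports bad requests.

```powerquery
let
    // Placeholder values, as in the full query
    ClientId = "xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxx",
    ClientSecret = "xxxxxxxxxxxxx",
    AzureTenant = "xxxxxx.onmicrosoft.com",
    OAuthUrl = Text.Combine({"https://login.microsoftonline.com/", AzureTenant, "/oauth2/token?api-version=1.0"}),
    // Uri.BuildQueryString URL-encodes each value, so no separate Uri.EscapeDataString call is needed
    Body = Uri.BuildQueryString([
        grant_type = "client_credentials",
        client_id = ClientId,
        client_secret = ClientSecret,
        resource = "https://api.loganalytics.io"
    ]),
    // Supplying Content makes Web.Contents send a POST
    Response = Web.Contents(OAuthUrl, [
        Content = Text.ToBinary(Body),
        Headers = [#"Content-Type" = "application/x-www-form-urlencoded"],
        // Hand 400/401 responses back to the query instead of failing immediately (assumed codes)
        ManualStatusHandling = {400, 401}
    ]),
    Status = Value.Metadata(Response)[Response.Status],
    OAuth = Json.Document(Response),
    // Return the token on success; otherwise raise an error that carries the response body as detail
    AccessToken =
        if Status = 200 then
            OAuth[access_token]
        else
            error Error.Record("TokenRequestFailed", "Token endpoint returned HTTP " & Text.From(Status), OAuth)
in
    AccessToken
```

The same security caveat applies here: the secret still lives in the query text, so treat this as a debugging aid.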

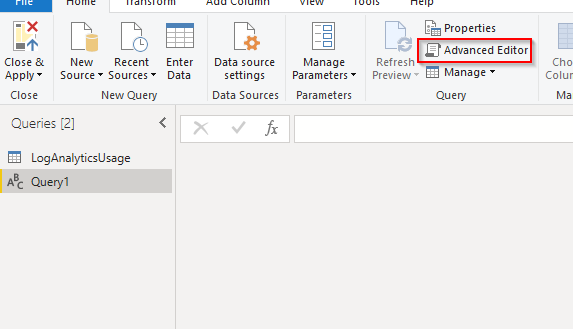

Let’s put that into our query in Power BI. Open Power BI, create a new Blank Query and open the Advanced Editor.

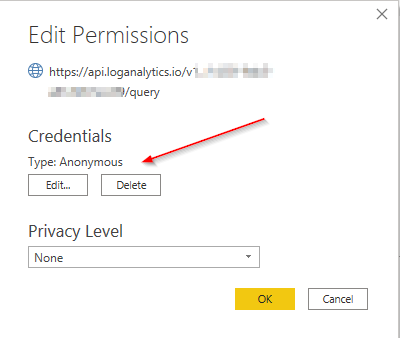

One last thing we need to do is set our authentication method to Anonymous. You should be prompted to do so after clicking Done, but if you weren’t, or you got click-happy and dismissed the prompt, you can click on Data Source Settings -> Edit Permissions. If you already have other data sources in your report, be sure to select the one that we just created and change its credentials to Anonymous.

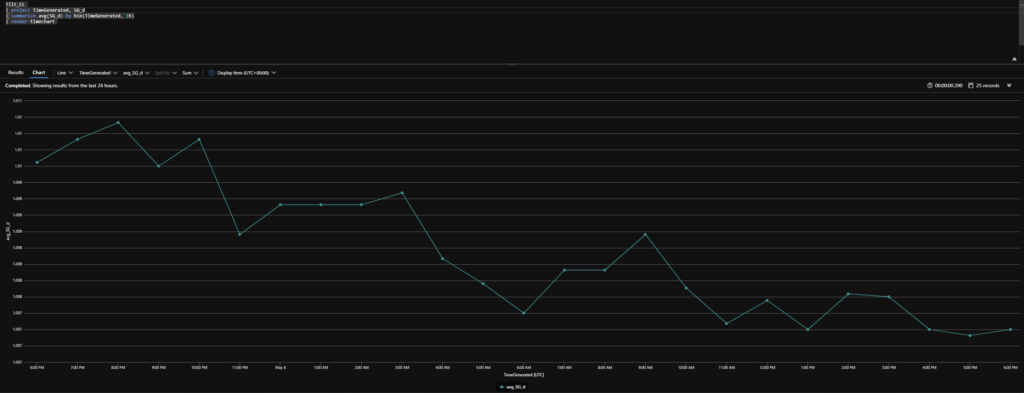

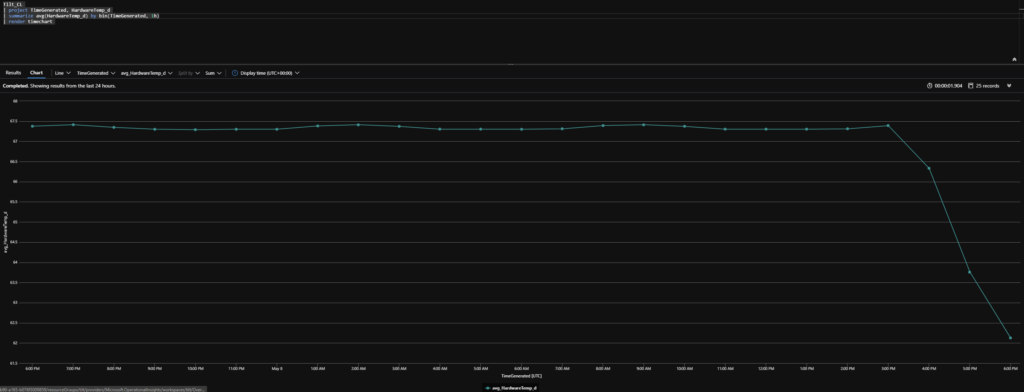

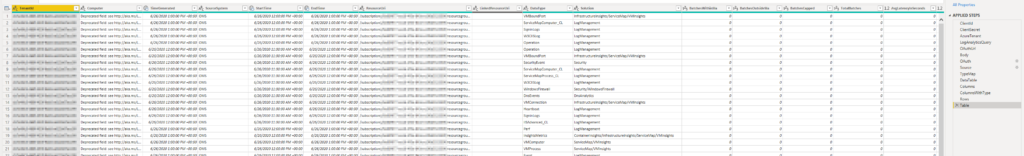

We should now have a preview of our data.

Now, if we need to add additional queries, it’s as simple as duplicating the dataset and changing the LogAnalyticsQuery variable on that dataset to the new query.
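As an alternative to duplicating the dataset, the same code can be wrapped in a function that takes the Log Analytics query as a parameter. This is a sketch, not something the export generates: the function name `RunLogAnalyticsQuery` is hypothetical, and the placeholders are the same ones used throughout this post.

```powerquery
// Hypothetical shared function; paste into a blank query and name it RunLogAnalyticsQuery
(LogAnalyticsQuery as text) as table =>
let
    ClientId = "xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxx",
    ClientSecret = Uri.EscapeDataString("xxxxxxxxxxxxx"),
    AzureTenant = "xxxxxx.onmicrosoft.com",
    OAuthUrl = Text.Combine({"https://login.microsoftonline.com/", AzureTenant, "/oauth2/token?api-version=1.0"}),
    Body = Text.Combine({"grant_type=client_credentials&client_id=", ClientId, "&client_secret=", ClientSecret, "&resource=https://api.loganalytics.io"}),
    // Step 1: fetch the token
    OAuth = Json.Document(Web.Contents(OAuthUrl, [Content = Text.ToBinary(Body)])),
    // Step 2: run the query that was passed in, using the token as a bearer header
    Source = Json.Document(Web.Contents("https://api.loganalytics.io/v1/workspaces/xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxx/query",
        [
            Query = [#"query" = LogAnalyticsQuery, #"x-ms-app" = "OmsAnalyticsPBI", #"prefer" = "ai.response-thinning=true"],
            Timeout = #duration(0, 0, 4, 0),
            Headers = [#"Authorization" = "Bearer " & OAuth[access_token]]
        ])),
    TypeMap = #table(
        {"AnalyticsTypes", "Type"},
        {
            {"string", Text.Type},
            {"int", Int32.Type},
            {"long", Int64.Type},
            {"real", Double.Type},
            {"timespan", Duration.Type},
            {"datetime", DateTimeZone.Type},
            {"bool", Logical.Type},
            {"guid", Text.Type},
            {"dynamic", Text.Type}
        }
    ),
    DataTable = Source[tables]{0},
    Columns = Table.FromRecords(DataTable[columns]),
    ColumnsWithType = Table.Join(Columns, {"type"}, TypeMap, {"AnalyticsTypes"}),
    Rows = Table.FromRows(DataTable[rows], Columns[name]),
    Table = Table.TransformColumnTypes(Rows, Table.ToList(ColumnsWithType, (c) => {c{0}, c{3}}))
in
    Table
```

Each additional dataset then becomes a one-line blank query such as `RunLogAnalyticsQuery("Usage | where TimeGenerated > ago(1d)")`, and the credentials live in one place instead of being copied into every dataset.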